- Amal Dorai

- Jun 6, 2023

After years of anticipation, Apple today finally announced its entry into the XR market with its Vision Pro headset.

This is a big moment for wearable technology, and for our investment thesis at Anorak Ventures. We've been focused on XR since Greg's 2013 investment in Oculus. We’ve invested in XR gaming (Rec Room/Virtex), enterprise collaboration (Arthur/Resolve), K-12 education (Prisms of Reality), secondary education (Osso VR/Moth&Flame), healthcare (Innerworld/Karuna), infrastructure (Vntana), and other adjacent areas. We’re big believers that XR, as a medium, can improve the way people work, learn, relax, and, most importantly, connect with each other across distance.

Here’s why we are bullish on the Apple Vision Pro, and the broader XR market, after today’s announcement:

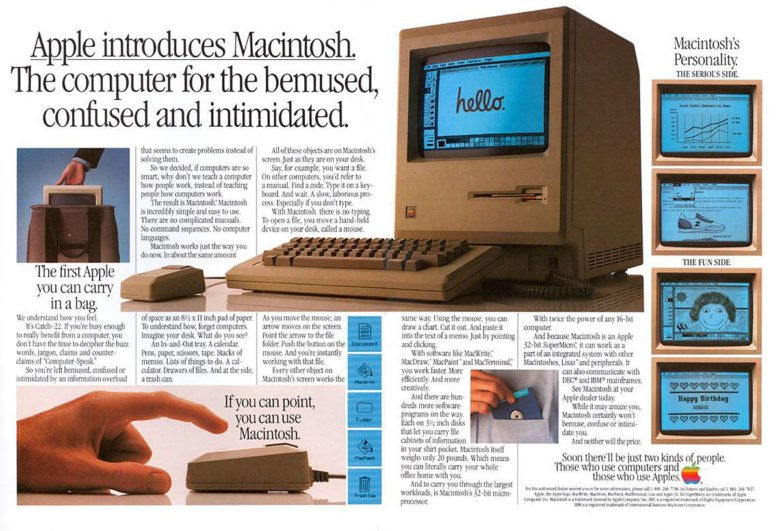

Apple is aspirational - Nobody is better than Apple at making technology cool. That comes from a combination of human-centric design, product quality, iconic marketing, and perhaps a bit of lingering Steve Jobs charisma. Google attempted AR with Glass, but it backfired when users were labeled "Glassholes." Meta has spent billions to widen VR's appeal, but its user base remains demographically narrow. With $40B of wearables revenue last year (one-fifth of Microsoft’s total revenue!), Apple is uniquely good at taking products from niche to mainstream.

Competition is good - Meta is currently the only viable option for XR at scale. Startups have long tried to bring new headsets to market, but the capital-intensive nature of novel hardware makes success difficult. Customers benefit when companies are forced to compete: innovation accelerates and prices come down. Regardless of whether the Vision Pro itself succeeds, consumers will benefit from today's announcement.

Flagship experience - Like Tesla, whose first product was a $140,000 two-seat convertible, Apple is entering the market with a high-end, no-compromise device that has impressed everyone lucky enough to try it. Meta, by contrast, chose to subsidize the cost of its headsets to drive adoption. The $3,500 price point of Apple’s headset shows conviction in its ability to produce amazing products. The Vision Pro will initially be out of reach for most people, but we expect that, like the iPhone, it will come to be seen as indispensable over time as its price continues to drop. Furthermore, the 525 Apple Stores around the world will be a valuable channel for demoing this new technology to millions of people, creating pent-up demand even among people who can’t yet afford it.

Marketing to the masses - There was a notable difference between how Apple marketed its headset today and how Meta has marketed its own. Apple’s presentation skipped the heavy technical jargon and deep dives into the technology; instead, it focused on familiar, practical use cases that users could easily grasp. Mark Zuckerberg’s fascination with the technology has long been reflected in Meta’s marketing, but it has likely limited XR's mainstream appeal. One of Apple’s greatest strengths is its ability to reach a mass audience by portraying an intimately familiar experience rather than by emphasizing cutting-edge, science-fiction technology.

One OS to rule them all - Complete control over both software and hardware gives Apple a distinct advantage in XR: it is uniquely positioned to bridge the most immersive interactions (in XR) with the most frequent ones (on the iPhone). Apple's tight integration across iOS, macOS, and iPadOS, along with its seamless blending of the physical and digital worlds, makes the Vision Pro a familiar and welcoming environment. Putting on a Meta Quest 2 today can be disorienting. Apple is painting an entirely different vision for XR, one where the experience can be fully immersive or not immersive at all, with a literal control dial (the Digital Crown) that lets users set their preferred level of immersion.

Apple’s play for the enterprise - Apple rarely highlights enterprise use cases for its products, but the Vision Pro video prominently featured remote work and prosumer applications, positioning the headset as a companion device for business travelers and an at-home device for immersive remote work. The design moves the battery into an external pack worn at the waist rather than on the head, which should make long sessions more comfortable. To us, this signals a commitment to the power user: someone who will spend an extra minute getting the headset ready because they plan to use it for 3 hours, not 3 minutes. Improved ergonomics will be essential for enterprise adoption.

Pioneering 2D/3D interaction model - Apple’s core experience centers on a familiar 2D interface and keeps the user directly connected to their physical surroundings. Familiar applications like Photos, FaceTime, and Keynote can be tiled and arranged in an immersive 3D space. Apple recognizes that very few people have tried XR or feel they need it, so it is shipping the Vision Pro with an iPad-like experience that feels familiar to users and allows its huge catalog of existing iPhone and iPad apps to run on Day 1. It’s also less intimidating: users aren’t thrown into a completely virtual world, which can be disorienting.

Anorak Ventures is tremendously excited for Apple to bring XR to market and then, over time, to the mass market. The visionary founders of our VR/AR portfolio companies have seen this world coming for years, even decades, and there’s no better company than Apple to show the world what this technology can do.

Contact: amal@anorak.vc